Some very bright people think that the quest for artificial intelligence could become an existential threat to humanity.

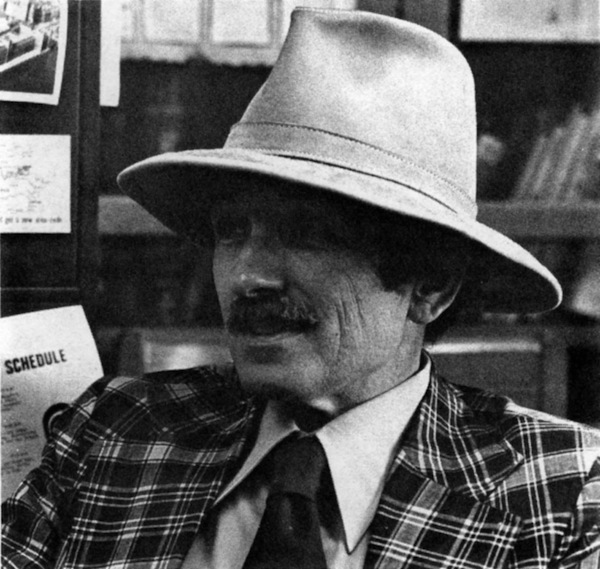

I.J. Good during his time as Professor of Statistics at Virginia Polytechnic Institute and State University (USA). PHOTO: Big Ear Radio Observatory, North American AstroPhysical Observatory

Irving Good was a brilliant statistician and a World War II code-breaker who worked alongside the legendary Alan Turing at Bletchley Park. He was also fascinated by what he called "ultra-intelligent machines".

In 1965 he wrote about computers that could outsmart any person and could themselves design and build ever-smarter machines, leading to an "intelligence explosion" that would leave human intelligence far behind.

“Thus the first ultra-intelligent machine is the last invention that man need ever make,” he wrote. Provided, he added, “that the machine is docile enough to tell us how to keep it under control.”

The idea of computers able to think like people – artificial intelligence or AI – has engaged some of our brightest minds for decades. Their conclusions haven’t always been positive.

Mathematician Vernor Vinge, futurist Ray Kurzweil and philosopher Nick Bostrom are among those warning that, for all its value in serving humanity, AI could become a dangerous adversary.

"Success in creating AI would be the biggest event in human history," wrote physicists Stephen Hawking, Frank Wilczek and Max Tegmark and computer scientist Stuart Russell on the news website The Huffington Post in 2014. "Unfortunately, it might also be the last, unless we learn how to avoid the risks."

Such technology could end up outsmarting financial markets, scientists and political leaders, and "developing weapons we cannot even understand," they wrote. In the short term the question is who controls AI, but "the long-term impact depends on whether it can be controlled at all."

AI is about computers that can perceive their environment and act on that information to achieve their goals. They might be autonomous machines such as robots, but they might also be large, complex software systems.

Most current AI research is being done by big IT corporations, including Google, Facebook and Apple, and by research groups they fund. When genetic codes were being cracked, people asked whether corporate profits would trump the public good. The same question applies to AI.

The debate around uncontrolled super-intelligence and corporate AI interests grew steadily through 2015, led by Elon Musk of Tesla Motors and SpaceX fame.

Musk is a private entrepreneur, just like the people behind Google and Apple, but unlike them he has embraced openness. Having (for good business reasons) made Tesla's electric-car patents freely available, Musk is now seeking to have the same thing happen to AI research.

OpenAI is a non-profit AI research company launched just before Christmas in Montreal, Canada. Unlike its corporate competitors, OpenAI doesn't have to turn a profit. Thanks to pledges from Musk and other benefactors, it has a billion-dollar kitty to do its own research and make the results publicly available.

OpenAI asks: should we be concerned about AI? "If left in the hands of giant, for-profit tech companies, could it spiral out of control? Could it be commandeered by governments to monitor and control their citizens, and could it ultimately destroy humankind?"

AI ethics research is booming. Tegmark is a co-founder of the Boston-based Future of Life Institute, while across the Atlantic, Oxford has the Future of Humanity Institute, led by Bostrom, and Cambridge has the Centre for the Future of Intelligence, established just last month.

IT interests are fighting back. Just before Christmas, Robert Atkinson, president of the Washington-based Information Technology and Innovation Foundation (ITIF), heavily criticised Bostrom, Musk and others, calling them "neo-Luddites" who want to stifle innovation and eliminate risk.

Claiming that Musk, of all people, is anti-innovation is quite funny, but this is politics at work. ITIF is a lobby group that promotes technological innovation. From that perspective, public disquiet about AI is not to be tolerated.

A couple of final thoughts. There’s a line of speculation that human intelligence will be amplified by the artificial kind, using virtual reality technology, so that it stays ahead of the smartest AI.

And intelligence of the human kind is the product of living bodies in a living world. Could the infinite richness of this biological experience be the X-factor that keeps it on top?

Regardless, the AI debate is worth having. To make sense of the universe we can use the services of super-smart machines, but we must be super-sure they know their place, always.